Calico Cloud Free Tier quickstart guide

This quickstart guide shows you how to connect a Kubernetes cluster to Calico Cloud Free Tier.

You'll learn how to create a cluster with kind, connect that cluster to the Calico Cloud web console, and use observability tools to monitor network traffic.

Before you begin

- You need to sign up for a Calico Cloud Free Tier account.

- You also need to install a few tools to complete this tutorial:

  - kind. This is what you'll use to create a cluster on your workstation. For installation instructions, see the kind documentation.
  - Docker Engine or Docker Desktop. This is required to run containers for the kind utility. For installation instructions, see the Docker documentation.
  - kubectl. This is the tool you'll use to interact with your cluster. For installation instructions, see the Kubernetes documentation.
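Before you start, you can confirm that the three tools are on your PATH. The following is a minimal POSIX shell sketch. check_tools is a hypothetical helper, not a command provided by kind, Docker, or kubectl.

```shell
# Hypothetical helper: report any of the named commands that are not on PATH.
# Returns 0 if every command is found, 1 otherwise.
check_tools() {
  missing=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example usage for this tutorial:
#   check_tools kind docker kubectl && echo "all tools found"
```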

Step 1. Create a cluster

In this step, you will:

- Create a cluster: Use kind to create a Kubernetes cluster.

- Verify the cluster: Check that the cluster is running.

- Create a file called config.yaml and give it the following content:

kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
- role: control-plane
- role: worker
- role: worker
networking:
  disableDefaultCNI: true
  podSubnet: 192.168.0.0/16

This configuration file tells kind to create a cluster with one control-plane node and two worker nodes. It also tells kind to create the cluster without a CNI plugin, so that you can install Calico as the CNI in the next step. The podSubnet range defines the IP addresses that Kubernetes will use for pods.

- Start your Kubernetes cluster with the configuration file by running the following command:

kind create cluster --name=calico-cluster --config=config.yaml

kind reads your configuration file and creates a cluster in a few minutes.

Expected output

Creating cluster "calico-cluster" ...
 ✓ Ensuring node image (kindest/node:v1.29.2) 🖼
 ✓ Preparing nodes 📦 📦 📦
 ✓ Writing configuration 📜
 ✓ Starting control-plane 🕹️
 ✓ Installing StorageClass 💾
 ✓ Joining worker nodes 🚜
Set kubectl context to "kind-calico-cluster"
You can now use your cluster with:

kubectl cluster-info --context kind-calico-cluster

Thanks for using kind! 😊

- To verify that your cluster is working, run the following command:

kubectl get nodes

You should see three nodes whose names start with the name you gave the cluster.

Expected output

NAME                           STATUS     ROLES           AGE     VERSION
calico-cluster-control-plane   NotReady   control-plane   5m46s   v1.29.2
calico-cluster-worker          NotReady   <none>          5m23s   v1.29.2
calico-cluster-worker2         NotReady   <none>          5m22s   v1.29.2

Don't wait for the nodes to reach a Ready status. They remain NotReady until you configure networking in the next step.
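You can also count nodes by status instead of reading the table. The sketch below assumes the default column layout of kubectl get nodes: NAME first, then STATUS, with one header row. count_nodes_by_status is a hypothetical helper name.

```shell
# Hypothetical helper: count the nodes whose STATUS column (field 2) equals $1.
# Reads `kubectl get nodes` output on stdin and skips the header row.
count_nodes_by_status() {
  awk -v want="$1" 'NR > 1 && $2 == want { n++ } END { print n + 0 }'
}

# Example usage:
#   kubectl get nodes | count_nodes_by_status NotReady   # expected: 3 right after Step 1
#   kubectl get nodes | count_nodes_by_status Ready      # expected: 3 once Calico is running
```

After Step 2, kubectl wait --for=condition=Ready nodes --all --timeout=300s is a built-in alternative. It blocks until every node reports Ready or the timeout expires.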

Step 2. Install Calico

In this step, you will install Calico in your cluster.

- Install the Tigera Operator and custom resource definitions.

kubectl create -f https://raw.githubusercontent.com/projectcalico/calico/v3.31.2/manifests/tigera-operator.yaml

Expected output

namespace/tigera-operator created
serviceaccount/tigera-operator created
clusterrole.rbac.authorization.k8s.io/tigera-operator-secrets created
clusterrole.rbac.authorization.k8s.io/tigera-operator created
clusterrolebinding.rbac.authorization.k8s.io/tigera-operator created
rolebinding.rbac.authorization.k8s.io/tigera-operator-secrets created
deployment.apps/tigera-operator created

- Install Calico by creating the necessary custom resources.

kubectl create -f https://raw.githubusercontent.com/projectcalico/calico/v3.31.2/manifests/custom-resources.yaml

Expected output

installation.operator.tigera.io/default created
apiserver.operator.tigera.io/default created
goldmane.operator.tigera.io/default created
whisker.operator.tigera.io/default created

- Monitor the deployment by running the following command:

watch kubectl get tigerastatus

After a few minutes, all the Calico components display True in the AVAILABLE column.

Expected output

NAME        AVAILABLE   PROGRESSING   DEGRADED   SINCE
apiserver   True        False         False      4m9s
calico      True        False         False      3m29s
goldmane    True        False         False      3m39s
ippools     True        False         False      6m4s
whisker     True        False         False      3m19s
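Instead of watching the table, you could have a script poll until every component reports True in the AVAILABLE column. The following is a minimal sketch. It assumes the column order shown in the expected output above, with NAME first and AVAILABLE second. all_tigerastatus_available is a hypothetical helper name.

```shell
# Hypothetical helper: succeed only if every data row of `kubectl get tigerastatus`
# output (read on stdin) has "True" in the AVAILABLE column (field 2).
# Prints the name of each component that is not yet available.
all_tigerastatus_available() {
  awk '
    NR > 1 && NF > 0 {
      rows++
      if ($2 != "True") { bad++; print "not available: " $1 }
    }
    END { if (rows > 0 && bad == 0) exit 0; exit 1 }
  '
}

# Example usage: poll every 10 seconds until all components are available.
#   until kubectl get tigerastatus | all_tigerastatus_available >/dev/null; do sleep 10; done
```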

Step 3. Connect to Calico Cloud Free Tier

In this step, you will connect your cluster to Calico Cloud Free Tier.

- Sign in to the Calico Cloud web console and click the Connect a cluster button on the welcome screen.

- Follow the prompts to create a name for your cluster (for example, quickstart-cluster) and copy a kubectl command to run in your cluster.

What's happening in this command?

This command creates three resources in your cluster:

  - A ManagementClusterConnection resource. This resource specifies the address of the Calico Cloud management cluster.
  - A Secret resource (tigera-managed-cluster-connection). This resource provides certificates for secure communication between your cluster and the Calico Cloud management cluster.
  - A Secret resource (tigera-voltron-linseed-certs-public). This resource provides certificates for secure communication for the specific components that Calico Cloud uses for log data and observability.

Example of generated kubectl command to connect a cluster to Calico Cloud Free Tier

kubectl apply -f - <<EOF
# Once applied to your managed cluster, a deployment is created to establish a secure tcp connection
# with the management cluster.
apiVersion: operator.tigera.io/v1
kind: ManagementClusterConnection
metadata:
  name: tigera-secure
spec:
  # ManagementClusterAddr should be the externally reachable address to which your managed cluster
  # will connect. Valid examples are: "0.0.0.0:31000", "example.com:32000", "[::1]:32500"
  managementClusterAddr: "oss-ui-01-management.calicocloud.io:443"
---
apiVersion: v1
kind: Secret
metadata:
  name: tigera-managed-cluster-connection
  namespace: tigera-operator
type: Opaque
data:
  # This is the certificate of the management cluster side of the tunnel.
  management-cluster.crt: ...
  # The certificate and private key that are created and signed by the CA in the management cluster.
  managed-cluster.crt: ...
  managed-cluster.key: ...
---
apiVersion: v1
kind: Secret
metadata:
  name: tigera-voltron-linseed-certs-public
  namespace: tigera-operator
type: Opaque
data:
  tls.crt: ...
EOF

-

To start the connection process, run the

kubectlcommand in your terminal.Example outputmanagementclusterconnection.operator.tigera.io/tigera-secure created

secret/tigera-managed-cluster-connection created

secret/tigera-voltron-linseed-certs-public created -

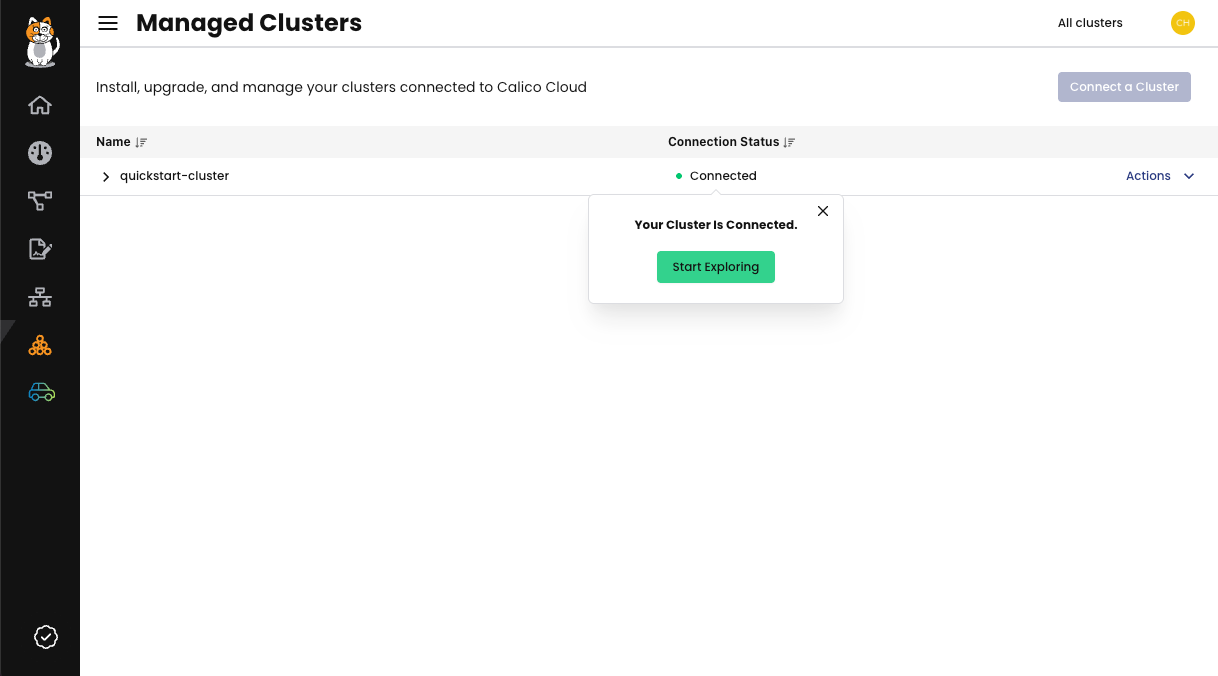

Back in your browser, click I applied the manifest to close the dialog. Your new cluster connection appears in the Managed Clusters page.

Figure 1: A screenshot of the Managed Clusters page showing the new cluster connection.

-

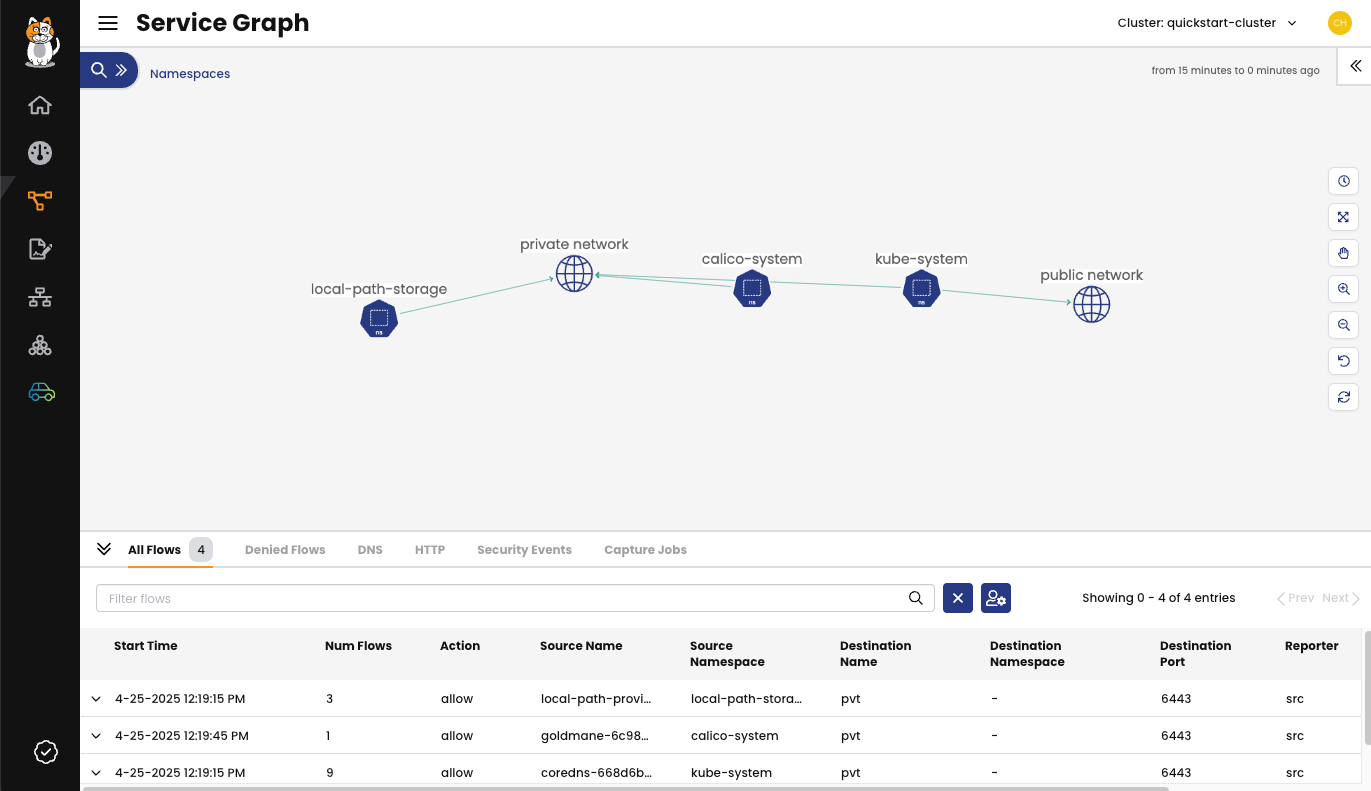

On the left side of the console, click Service Graph to view the Service Graph, which we'll be using to view your network traffic in this tutorial. What you see is a dynamic diagram of the namespaces in your cluster and the connections between them. For now, it shows only system namespaces.

Figure 2: A screenshot of the Service Graph showing system namespaces.

We'll return to this page to see the traffic after we deploy a sample application in the next step.
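The connection command creates three resources, so a quick sanity check is to confirm that the kubectl apply output lists all of them. The following is a minimal sketch, assuming the output format shown in the example output above. check_connection_resources is a hypothetical helper name. If you run kubectl apply again, it reports configured or unchanged instead of created, so the sketch accepts those too.

```shell
# Hypothetical helper: read `kubectl apply` output on stdin and check that each of
# the three resources from the connection manifest is reported.
# Returns 0 if all three are present, 1 otherwise.
check_connection_resources() {
  output=$(cat)
  status=0
  for res in \
    managementclusterconnection.operator.tigera.io/tigera-secure \
    secret/tigera-managed-cluster-connection \
    secret/tigera-voltron-linseed-certs-public
  do
    if ! printf '%s\n' "$output" | grep -Eq "^${res} (created|configured|unchanged)\$"; then
      echo "missing from kubectl output: $res" >&2
      status=1
    fi
  done
  return "$status"
}

# Example usage (the manifest file name here is hypothetical):
#   kubectl apply -f connection.yaml | check_connection_resources
```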

Step 4. Deploy NGINX and BusyBox to generate traffic

Now it's time to generate some network traffic. We'll do this first by deploying an NGINX server and exposing it as a service in the cluster. Then we'll make HTTP requests from another pod in the cluster to the NGINX server and to an external website. For this we'll use the BusyBox utility.

In this step, you will:

- Create a server: Deploy an NGINX web server in your Kubernetes cluster.

- Expose the server: Make the NGINX server accessible within the cluster.

- Test connectivity: Use a BusyBox pod to verify connections to the NGINX server and the public internet.

- Create a namespace for your application:

kubectl create namespace quickstart

Expected output

namespace/quickstart created

- Deploy an NGINX web server in the quickstart namespace:

kubectl create deployment --namespace=quickstart nginx --image=nginx

Expected output

deployment.apps/nginx created

- Expose the NGINX deployment to make it accessible within the cluster:

kubectl expose --namespace=quickstart deployment nginx --port=80

Expected output

service/nginx exposed

- Start a BusyBox session to test whether you can access the NGINX server:

kubectl run --namespace=quickstart access --rm -ti --image busybox /bin/sh

This command creates a BusyBox pod inside the quickstart namespace and starts a shell session inside the pod.

Expected output

If you don't see a command prompt, try pressing enter.
/ #

- In the BusyBox shell, run the following command to test communication with the NGINX server:

wget -qO- http://nginx

You should see the HTML content of the NGINX welcome page.

Expected output

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

This confirms that the BusyBox pod can access the NGINX server.

- In the BusyBox shell, run the following command to test communication with the public internet:

wget -qO- https://docs.tigera.io/pod-connection-test.txt

You should see the content of the file pod-connection-test.txt.

Expected output

You successfully connected to https://docs.tigera.io/pod-connection-test.txt.

This confirms that the BusyBox pod can access the public internet.

-

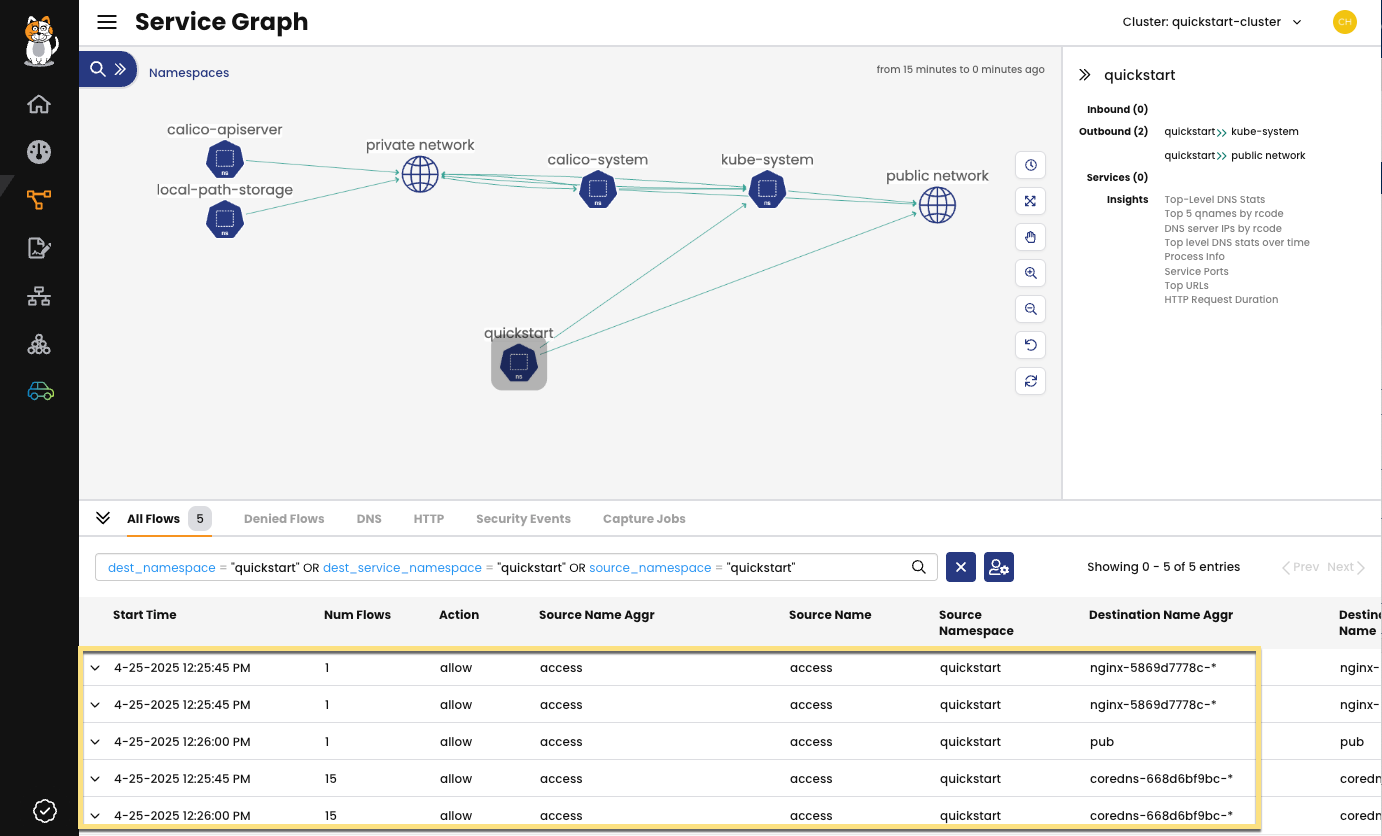

In the web console, go to the Service Graph page to view your flow logs. It may take up to 5 minutes for the flows to appear. When they appear, click the

quickstartnamespace in the Service Graph to filter the view to show the flows only in that namespace. In the list of flows, you should see three new connection types: one tocorednsone tonginx, and another topub, meaning "public network".

Figure 3: Service Graph with

quickstartnamepace selected showing flows to NGINX and public network.
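The BusyBox pod can reach the server at http://nginx because pods resolve short Service names through cluster DNS, which is why a coredns flow appears in the Service Graph. Inside the quickstart namespace, the short name nginx expands to the Service's fully qualified name. The sketch below builds that name. It assumes the default cluster domain, cluster.local. svc_fqdn is a hypothetical helper name.

```shell
# Hypothetical helper: build the cluster DNS name of a Service.
#   $1 = Service name, $2 = namespace, $3 = cluster domain (defaults to cluster.local)
svc_fqdn() {
  printf '%s.%s.svc.%s\n' "$1" "$2" "${3:-cluster.local}"
}

# Example usage:
#   svc_fqdn nginx quickstart    # nginx.quickstart.svc.cluster.local
```

Assuming the default cluster domain, running wget -qO- http://nginx.quickstart.svc.cluster.local from the BusyBox shell should return the same welcome page as http://nginx.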

Step 5. Restrict all traffic with a default deny policy

To effectively secure your cluster, it's best to start by denying all traffic, and then gradually allowing only the necessary traffic. We'll do this by applying a Global Calico Network Policy that denies all ingress and egress traffic by default.

In this step, you will:

- Implement a global default deny policy: Use a Global Calico Network Policy to deny all ingress and egress traffic by default.

- Verify access is denied: Use your BusyBox pod to confirm that the policy is working as expected.

-

Create a Global Calico Network Policy to deny all traffic except for the necessary system namespaces:

kubectl create -f - <<EOF

apiVersion: projectcalico.org/v3

kind: GlobalNetworkPolicy

metadata:

name: default-deny

spec:

selector: projectcalico.org/namespace not in {'kube-system', 'calico-system', 'calico-apiserver'}

types:

- Ingress

- Egress

EOFExpected outputglobalnetworkpolicy.projectcalico.org/default-deny created -

Now go back to your BusyBox shell and test access to the NGINX server again:

wget -qO- http://nginxYou should see the following output, indicating that access is denied:

Expected outputwget: bad address 'nginx' -

Test access to the public internet again:

wget -qO- https://docs.tigera.io/pod-connection-test.txtYou should see the following output, indicating that egress traffic is also denied:

Expected outputwget: bad address 'docs.tigera.io' -

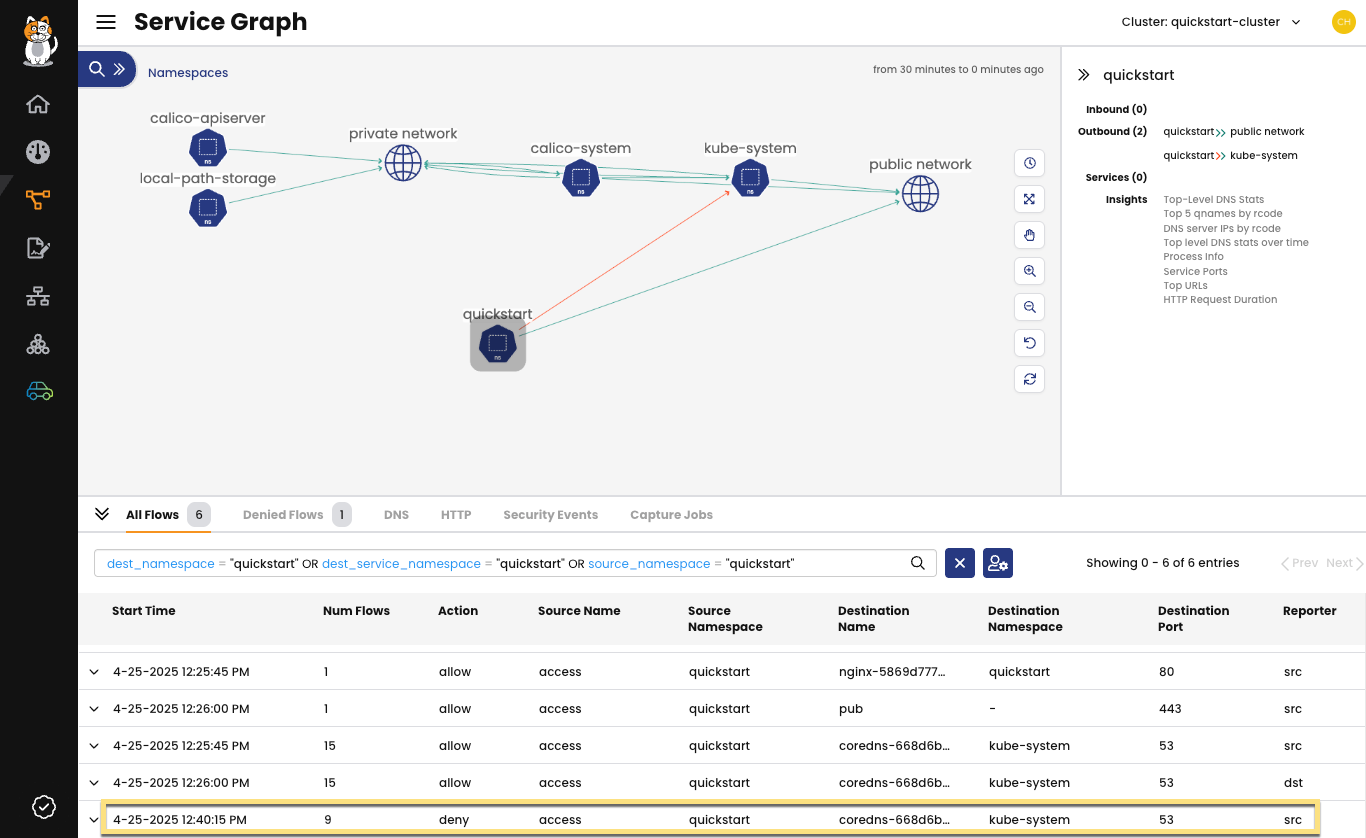

Return to your browser to see the denied flow logs appear in the Service Graph. You should see two denied flows to

coredns.

Figure 4: Service Graph showing denied flows to

coredns.By following these steps, you have successfully implemented a global default deny policy and verified that it is working as expected.
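It can help to see which namespaces the default-deny selector actually covers. The following sketch mimics the selector's not in test on a namespace name. It is a simplification: Calico evaluates the selector against endpoint labels, not through a shell case statement. default_deny_scope is a hypothetical name. Note that coredns runs in kube-system, which is exempt, while the BusyBox and NGINX pods in quickstart are selected.

```shell
# Hypothetical helper that mimics the default-deny selector:
#   projectcalico.org/namespace not in {'kube-system', 'calico-system', 'calico-apiserver'}
# Prints "selected" if pods in namespace $1 fall under the policy, otherwise "exempt".
default_deny_scope() {
  case "$1" in
    kube-system|calico-system|calico-apiserver) echo exempt ;;
    *) echo selected ;;
  esac
}

# Example usage:
#   default_deny_scope quickstart    # selected
#   default_deny_scope kube-system   # exempt
```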

Step 6. Create targeted network policy for allowed traffic

Now that you have a default deny policy in place, you need to create specific policies to allow only the necessary traffic for your applications to function.

The default-deny policy blocks all ingress and egress traffic for pods not in system namespaces, including our access (BusyBox) and nginx pods in the quickstart namespace.

In this step, you will:

- Allow egress traffic from BusyBox: Create a network policy to allow egress traffic from the BusyBox pod to the public internet.

- Allow ingress traffic to NGINX: Create a network policy to allow ingress traffic to the NGINX server.

- Create a Calico network policy in the quickstart namespace that selects the access pod and allows all egress traffic from it.

kubectl create -f - <<EOF
apiVersion: projectcalico.org/v3
kind: NetworkPolicy
metadata:
  name: allow-busybox-egress
  namespace: quickstart
spec:
  selector: run == 'access'
  types:
  - Egress
  egress:
  - action: Allow
EOF

Expected output

networkpolicy.projectcalico.org/allow-busybox-egress created

- Back in the BusyBox shell, test access to the public internet again. Because egress traffic is now allowed from this pod, this should succeed:

wget -qO- https://docs.tigera.io/pod-connection-test.txt

Expected output

You successfully connected to https://docs.tigera.io/pod-connection-test.txt.

- Test access to the NGINX server again. The new policy allows egress from the access pod, but the default-deny policy still blocks ingress to the nginx pod, so this request should fail.

wget -qO- http://nginx

Expected output

wget: bad address 'nginx'

- Create another Calico network policy in the quickstart namespace. This policy selects the nginx pods (using the label app=nginx) and allows ingress traffic only from pods with the label run=access.

kubectl create -f - <<EOF
apiVersion: projectcalico.org/v3
kind: NetworkPolicy
metadata:
  name: allow-nginx-ingress
  namespace: quickstart
spec:
  selector: app == 'nginx'
  types:
  - Ingress
  ingress:
  - action: Allow
    source:
      selector: run == 'access'
EOF

Expected output

networkpolicy.projectcalico.org/allow-nginx-ingress created

- From the BusyBox shell, test access to the NGINX server one more time:

wget -qO- http://nginx

Now that both egress from access and ingress to nginx (from access) are allowed, the connection should succeed. You'll see the NGINX welcome page:

Expected output

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

You have now successfully implemented a default deny policy while allowing only the necessary traffic for your applications to function.
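For a pod-to-pod connection, the policies combine in a simple way: the source must allow the traffic on egress, and the destination must allow it on ingress. The sketch below is a deliberately simplified model of this tutorial's three policies, not of Calico's policy engine. It assumes both pods are in the quickstart namespace, so default-deny applies to both. It also ignores the DNS lookup to coredns. connection_allowed is a hypothetical name.

```shell
# Hypothetical, simplified model of this tutorial's policies for a connection
# between two pods in the quickstart namespace (both selected by default-deny).
#   $1 = source pod label, $2 = destination pod label,
#   $3 = space-separated names of the allow policies that exist
# Traffic is allowed only if egress is allowed at the source AND ingress at the destination.
connection_allowed() {
  src=$1
  dst=$2
  policies=" $3 "
  egress=deny    # default-deny blocks egress unless an allow policy matches
  ingress=deny   # default-deny blocks ingress unless an allow policy matches

  # allow-busybox-egress: allow all egress from pods labelled run=access.
  case "$policies" in
    *" allow-busybox-egress "*)
      if [ "$src" = "run=access" ]; then egress=allow; fi ;;
  esac

  # allow-nginx-ingress: allow ingress to app=nginx pods, only from run=access pods.
  case "$policies" in
    *" allow-nginx-ingress "*)
      if [ "$dst" = "app=nginx" ] && [ "$src" = "run=access" ]; then ingress=allow; fi ;;
  esac

  if [ "$egress" = allow ] && [ "$ingress" = allow ]; then
    echo allowed
  else
    echo "denied (egress=$egress, ingress=$ingress)"
  fi
}

# Example usage:
#   connection_allowed run=access app=nginx ""
#   connection_allowed run=access app=nginx "allow-busybox-egress"
#   connection_allowed run=access app=nginx "allow-busybox-egress allow-nginx-ingress"
```

The three example calls, in order, match the results of this step. The connection is denied before any allow policy exists. It is still denied with only the egress policy, because ingress to nginx is blocked. It is allowed once both policies exist.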

Step 7. Clean up

- To delete the cluster, run the following command:

kind delete cluster --name=calico-cluster

Expected output

Deleted cluster: calico-cluster

- To remove the cluster from Calico Cloud, go to the Managed Clusters page. Click Actions > Disconnect, and in the confirmation dialog, click I ran the commands. (Ordinarily you would run the commands from the dialog, but because you already deleted the cluster, you don't need to.)

- Click Actions > Remove to fully remove the cluster from Calico Cloud. You can now connect another cluster to make use of the observability tools.

Additional resources

- To view requirements and connect another cluster, see Connect a cluster to Calico Cloud Free Tier.